Women around the world have been suffering from the spread of deepfakes and the misuse of AI technology. The recent controversy involving Taylor Swift has brought this pressing issue to the public’s attention, and the best we can hope for is that it speeds up the strengthening of AI laws.

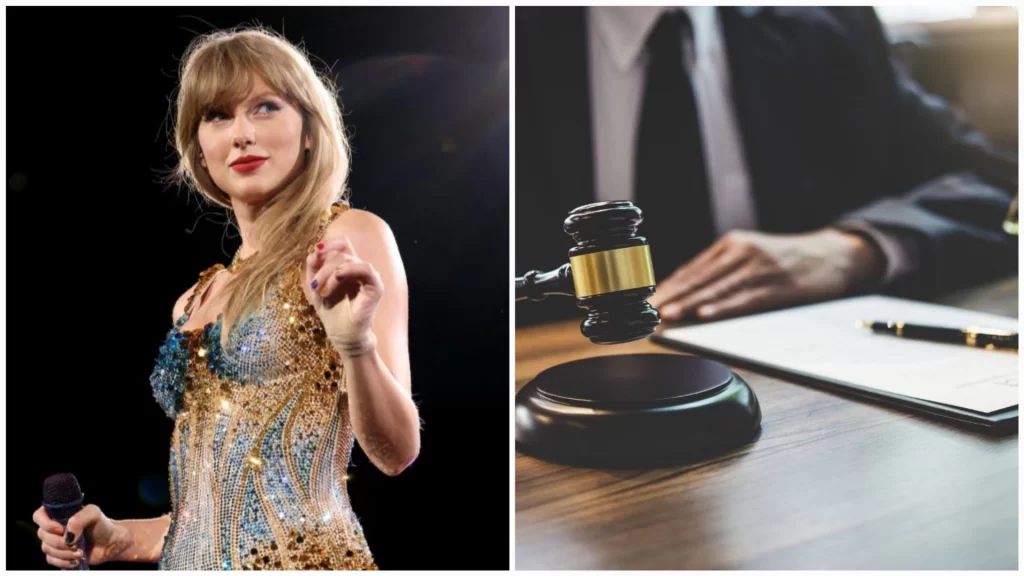

Taylor Swift is contemplating taking action against deepfakes

In the past week, several obscene images of Taylor Swift dressed in Kansas City Chiefs merchandise went viral after being uploaded to the notorious Celeb Jihad website.

i suppose we should be glad that taylor swift has inadvertently brought the danger of AI generated p*rn to public attention, as frustrating as it is that it took this long for people to care. here are more stories:

• male classmates made deepfake p*rn of over 30 female students… pic.twitter.com/vpW3ko1xmX

— mary morgan (@maryarchived) January 26, 2024

The images became particularly widespread on X (formerly Twitter), which is quickly becoming a hub of illegal and explicit content following its acquisition and the layoff of hundreds of moderators.

A source close to the American singer shared that she is considering taking legal action against the creator and publisher of these “abusive, offensive, and exploitative” images. The source further condemned the social media platform that allowed such images to exist and spread.

Speaking to DailyMail, the source added that these images should be removed rather than promoted. Swift’s circle of family and friends is furious, “and every woman should be,” the source said, calling for laws that prevent and punish similar actions.

The state is against Deepfakes and misuse of AI

In the past year, several members of Congress have stepped forward with proposals to regulate deepfake websites and other exploitative uses of AI. Democrat Joe Morelle recently put forward a bill to outlaw such practices.

This is going to get completely out of control…deepfake non-consensual porn…and the victims are almost ALWAYS women…too few laws to protect us. https://t.co/kIZbrSpDZK

— Amber Mac (@ambermac) January 26, 2024

New Jersey congressman Tom Kean, Jr. also expressed concern about AI technology, noting in a statement that it is advancing faster than the necessary guardrails. He added that the state needs to establish laws to combat an alarming trend that affects celebrities and ordinary citizens alike.

Currently, nonconsensual deepfakes are illegal in Texas, Minnesota, New York, Virginia, Hawaii, and Georgia. Illinois and California don’t have laws directly criminalizing the content, but victims there can sue the creators for defamation.

targeting deepfake porn: aka how to turn ur rage into action

the current legal framework protecting against deepfake pornography is patchwork at best. laws often lag behind changing technology. this thread will highlight what is being done about this issue and how you can help

— chase (@not_chasebank) January 25, 2024

Advocates and legal experts have been calling for federal regulation that can provide uniform protection across the country. Bills such as the AI Labeling Act and the Deepfakes Accountability Act have been proposed to criminalize the offense.

“Deepfakes can sow confusion and perpetuate fraud,” said Robert Weissman, the president of Public Citizen, which has petitioned for aggressive action against deepfakes. His statement came after an AI-generated audio clip of President Joe Biden asking people not to vote spread in New Hampshire.

Caution in passing Deepfake laws

While the misuse of AI and deepfake technology has been present for decades, its rampant use in recent years has become a pressing issue. Affected families and researchers have been alerting lawmakers to the harmful effects of technology that can create explicit child abuse content.

I really hope that Taylor can sue whoever created those awful images. There need to be safeguards in place for ethical AI that deep fake p*rn should not be able to be generated.

— Rafranz ⁷ (@rafranzdavis) January 26, 2024

Others have continued to urge caution in implementing laws. The American Civil Liberties Union, the Electronic Frontier Foundation, and the Media Coalition, which represents publishers and movie studios, have asked lawmakers to avoid proposals that could infringe on the First Amendment.

Joe Johnson, an attorney for the ACLU of New Jersey, has suggested that some concerns about the abusive use of AI and deepfakes can be tackled under existing cyber harassment laws.